The Perfect Sync

In a previous article, I detailed my awkward experience with Windows Home Server and setting up a stable FTP server. In the end, I converted it into an XP server and set my clients up with NetDrive so that they could mount the FTP server as a drive letter and browse effortlessly.

Problem is, NetDrive’s performance was subpar with large files, such as PDFs. For example, if I were to open a large PDF, instead of caching the entire PDF and reading it locally, NetDrive would download the PDF in parts, much the same way a web browser does when you view a large PDF in it. Because many of the PDFs on the server are large (100MB+), this resulted in extremely slow performance. Moreover, a client requested that the files be made available offline so that data from the server could be reviewed and edited while on a plane, for example. Dropbox’s core sync engine evidently left quite an impression, and rightfully so.

I began an epic quest for the perfect realtime, bi-directional sync utility that I could use in conjunction with NetDrive to sync between a local copy of the server data and the server itself — in an effort to essentially replicate the Dropbox-like sync experience.

I must have installed over a hundred sync applications. This was an exhausting and defeating endeavor. Many sync applications are remarkably similar and there is heavy competition among them. At one point, I had a near-perfect sync using a remarkably capable (albeit not-entirely-user-friendly) program called FTP Auto Sync, which I could get working about 80% of the time. However, none of the programs could handle multi-client scenarios. My server is intended to handle changes from multiple users simultaneously. So, one user may delete a file while another user adds 10GB worth of new files somewhere else. What I was essentially asking all of these programs to do was “check whatever was done last on the server and sync it back with whatever I did last locally and figure it out.” A tall order. Problem is, a locally residing program is blind to the changes made on the remote FTP server while the client is offline, and is unable to monitor all folders at all times anyway.

Let’s say I delete four files from the server. How is the local client to know whether to delete the files from the local copy, or to sync the local files back to the server? To get around this, some programs maintain a database of changes; however, they still do not work in a multi-client environment unless I were to switch to an entirely different platform, such as iFolder. Many programs were simply unable to handle realtime syncing with a pseudo-drive such as NetDrive at all.
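The “database of changes” idea can be sketched in a few lines. This is only an illustration under stated assumptions, not how any of the programs mentioned here actually work: the function name `reconcile` and the action labels are hypothetical, each side is reduced to a set of file names, and edits to file contents are ignored. The point is that a remembered snapshot of the last sync is what lets a client tell “deleted on the server” apart from “newly created locally.”

```python
def reconcile(last_synced, local, remote):
    """Decide an action per file name from three sets of names.

    last_synced: names present on both sides after the previous sync
                 (the hypothetical "database of changes").
    local:       names currently in the local copy.
    remote:      names currently on the server.
    """
    actions = {}
    for name in sorted(last_synced | local | remote):
        in_base = name in last_synced
        in_local = name in local
        in_remote = name in remote
        if in_local and in_remote:
            actions[name] = "keep"
        elif in_local:
            # Missing on the server: it was deleted there if we had synced it
            # before, otherwise it is a new local file.
            actions[name] = "delete locally" if in_base else "upload"
        elif in_remote:
            # Missing locally: deleted here if previously synced, otherwise
            # it is a new server file.
            actions[name] = "delete on server" if in_base else "download"
        else:
            # Gone from both sides; only the snapshot still remembers it.
            actions[name] = "forget"
    return actions


if __name__ == "__main__":
    snapshot = {"a.pdf", "b.pdf"}
    local_now = {"a.pdf", "b.pdf", "c.txt"}  # c.txt was added locally
    server_now = {"b.pdf"}                   # a.pdf was deleted on the server
    for name, action in reconcile(snapshot, local_now, server_now).items():
        print(name, "->", action)
```

Without the snapshot, `a.pdf` and `c.txt` look identical to the client: both exist locally and not on the server. The sketch also hints at why multiple clients are hard, since the answer is only right if each client’s snapshot really reflects the last state it agreed on with the server.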

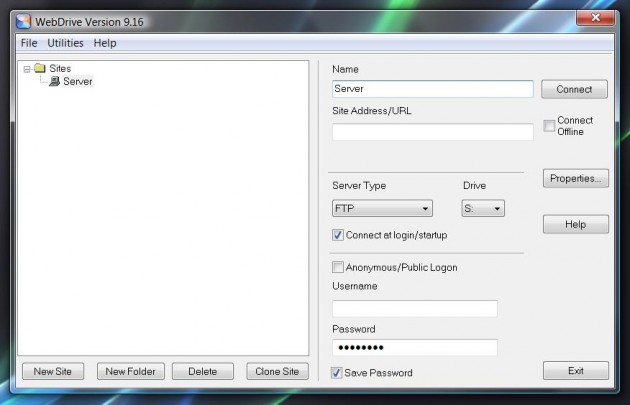

I ended up uninstalling everything, including NetDrive, and installing WebDrive to replace it after discovering that it had advanced caching and offline synchronization features. The main WebDrive interface may look similar to NetDrive, but the underlying technology is much more robust and feature-rich.

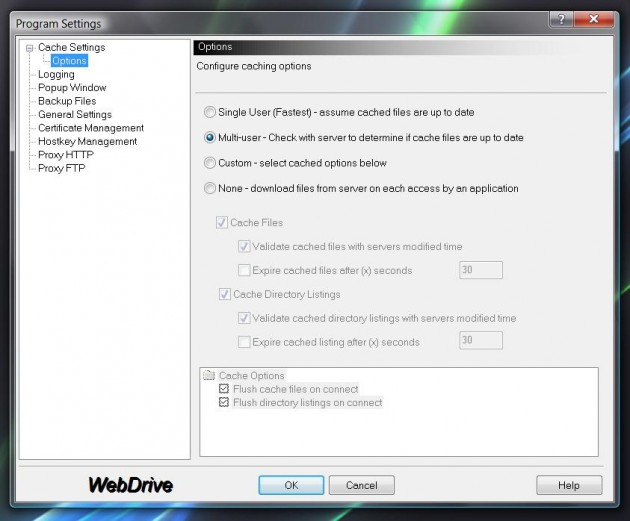

By default, WebDrive is configured for “Single User” under Cache Settings. This implies that you will be the only one making changes on the server. Since my server data will be changed by many users, I wanted mine to be Multi-user, which makes WebDrive check the modified date/time on the server files to verify that the cached files are up to date. If they are not, it will re-download the files as necessary.
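As a rough illustration of that Multi-user check, here is a minimal sketch. It is not WebDrive’s actual logic, which is not documented here: the function name `stale_files`, the two dictionaries mapping file names to modified times, and the rule that any timestamp difference means “stale” are all assumptions made for illustration.

```python
from datetime import datetime


def stale_files(cached, server):
    """Return the names that should be re-downloaded.

    cached: file name -> modified time recorded when the file was cached.
    server: file name -> modified time currently reported by the server.
    A file counts as stale when it is not cached yet or when its recorded
    timestamp differs from the server's (an assumed rule).
    """
    return sorted(
        name for name, mtime in server.items() if cached.get(name) != mtime
    )


if __name__ == "__main__":
    monday = datetime(2011, 1, 3, 9, 0)
    tuesday = datetime(2011, 1, 4, 9, 0)
    cache_index = {"a.pdf": monday, "b.pdf": monday}
    server_listing = {"a.pdf": monday, "b.pdf": tuesday, "c.pdf": monday}
    print(stale_files(cache_index, server_listing))
```

In “Single User” mode a check like this would be unnecessary, because nobody else could have changed the server copy since it was cached.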

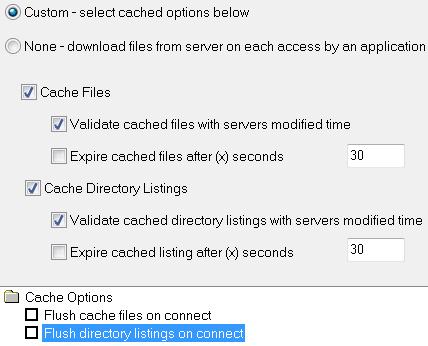

You’ll also note that on the bottom, there are two additional options for flushing the cache upon connecting. Since this is the default behavior for Multi-user, I selected Custom and unchecked these two so that the cached files would remain.

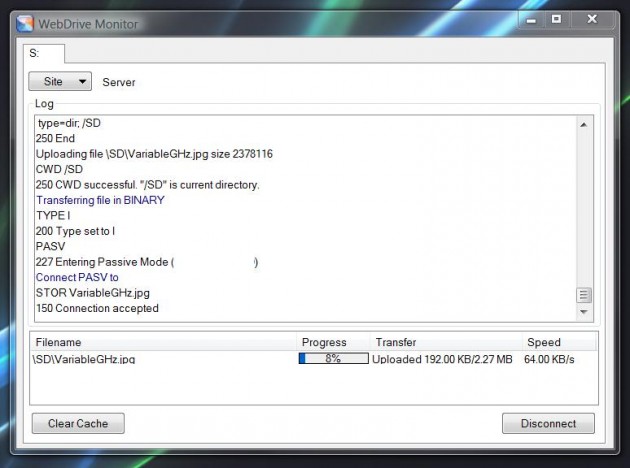

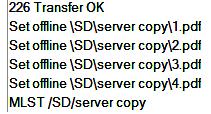

Once you have connected to your FTP server, WebDrive has a useful little tool called the WebDrive Monitor. You can access it by right clicking on the WebDrive system tray icon and selecting “Show Monitor.” The Monitor displays the raw FTP commands as they are executed between the client and server as well as file transfers, speed, and a handy progress bar. In addition, the WebDrive system tray icon flashes a world icon whenever data is being transferred. Whenever there is any kind of delay, it’s nice to be able to open up the Monitor and see what is slowing things down, or even to troubleshoot an issue on the server if necessary.

Now, to test the offline sync, I created a test folder entitled “server copy,” placed four PDFs in it, and made it available offline. Preparing a system for offline files unfortunately requires a few steps. Those steps can be found in complete detail here, so please refer to that PDF and read it entirely if you intend to use WebDrive for this purpose. First, I opened the WebDrive program settings and checked both “Synchronize Offline files at Connect time” and “Synchronize Offline files at Disconnect time” for full synchronization. With the server in “online mode,” I right-clicked on the “server copy” folder and, under WebDrive, selected “Make Available Offline.” Since I had the Monitor open, I could see that the files were “Set offline.”

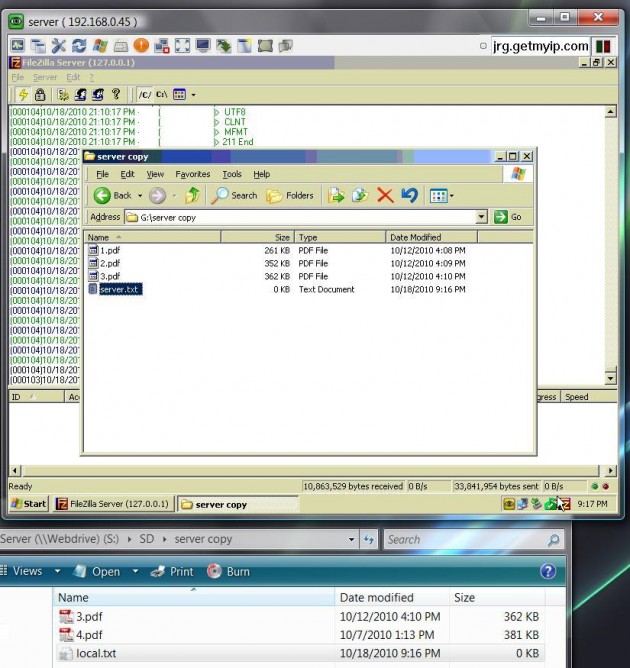

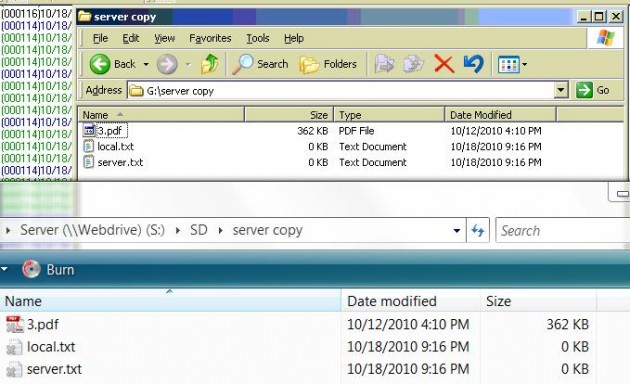

Once the files were marked for offline mode, I reconnected to the WebDrive in “offline mode” by checking the “Connect Offline” box next to the Connect button. Of course, you must first disconnect from the server in order to reconnect, even in offline mode. Once connected, I VNC’d to the server unit directly, deleted 4.pdf, and added a new text file called “server.txt”; on the local “offline” copy, I deleted 1.pdf and 2.pdf and added a new text file called “local.txt.”

WebDrive has several ways to perform a sync: (1) right-click on the drive letter itself and select WebDrive > Synchronize Now; (2) right-click on the system tray icon, select your WebDrive, and then choose Synchronize Now; or (3) simply go back into Online mode, where WebDrive will automatically perform a sync on connect. I right-clicked on the system tray icon and selected “Go Online” to initiate an auto-sync. The sync was near instantaneous since my file sizes were trivial.

I was hoping for a solution that would free my clients from having to right-click and generally be reminded so much that they are using a workaround for Dropbox, but without Dropbox’s core sync engine, this is as elegant as it gets. I am informed that Novell/kablink’s iFolder (server software not available for Windows) and SparkleShare (not yet available) as well as other utilities such as Unison (no longer under active development) could potentially solve my FTP sync issues as well. My quest is over, at least for now, and while it may not be entirely seamless, my clients will be given the closest thing to the perfect sync.

admin@variableghz.com

I’ve just found this little, ugly, but very useful freeware:

http://www.allwaysync.com/

works with network drives, ldap, ftp, and also amazon s3…!!

hope you’ll tell me what you think of it.

cause for now it seems to fit my requirements !

Adrien,

AllWaySync does work very well. However, as I mentioned in this article, I was unable to use it because it couldn’t do a true bi-directional realtime sync. It handles changes on the client side well, but changes on the server side very poorly.

Hi Samuel

I’ve read your article with great interest, and I’ve also read your reply.

In your second post you wrote that AllWaySync handles the server side “very poorly.”

I’ve read in web-site allwaysync.com this info:

“Implements true all-way (two-way, three-way, etc.) synchronization.”

Is this characteristic true?

Bye Andrew

Andrew,

The issue is with having true bi-directional realtime sync on an FTP server with changes happening on the server-side as well as the client-side. AllWaySync works fine if you plan to make changes on the client-side and have them sync’d to the server, but once you start demanding immediate real-time sync (bidirectionally!) things usually get too complex for it to handle.

If you find a way to make it work, please let me know!

Hey Samuel,

Great job on finding out a great way to get bi-directional sync. I too have tried many syncing programs to get it working just right (not hundreds, but a few dozen or so). I didn’t run into the problems with server-side file maintenance that you did with AllWaySync; the problem I did run into was its file limitation. I’ll keep looking for some more solutions, but I’ve got your article bookmarked.

Frank,

Thank you for your remarks. As I am sure you noticed, there are thousands of sync programs — most of them bad. What was the file limitation of AllWaySync that you ran into? I may have to give it a try again as I’ve run into a few issues with WebDrive since posting this. From time to time, I have to “flush” the cache because the program tends to cling on to some cached files for dear life and refuses to update without flushing the cache. This hasn’t been a problem in practice, but it is likely to be a problem for a client of mine at some point.

I take great issue with inconsistent results, as the intrinsic nature of computers favors stability. 1 or 0. On or off. Working or not.

Sorta-sometimes is unacceptable.

Samuel,

After the trial period on AllWaySync, it stops a profile from running if it has already transferred more than a few gigs. Some forums say that AllWaySync is great for weekly backups/syncs, but not for realtime sync like we want. I’m finding great success with the open source program Unison. But setting it up on Windows was a bit of a pain (having to install a native win32 ssh program and matching it with a Linux ssh server, and getting the GTK GUI on Windows was a pain too). Once set up, the GUI works wonderfully, and it runs from the command line too. I’m gonna try to write a script to continually sync it later this week. I’ll let you know how it goes. Take care and Happy New Year.

Frank,

As you mentioned, there are indeed a *lot* of programs that are excellent for backups/syncs — realtime is where things get really tricky as you have gathered. I looked into Unison, but it just seemed like too much to get working and I wanted to avoid having to deal with SSH. I’d be very curious to know how your script turns out, check in with me! =)

Samuel.

Unison is much more config heavy than I thought. I ended up installing Cygwin to get a Unix-like environment. In order to get everything to work nicely I needed Cygwin to install ssh, rsync, the GNU compilers, and cron. After making Unison from source I set the default profile to include the paths of the directories I wanted to sync. I turned on fastcheck (otherwise it’d check the entire file for changes instead of just the dates). I set anything over 10MB to transfer using rsync, and then set it to use temp folders for transferring. Unison used ssh to connect to my Linux server (if you wanted to use a Windows server, I imagine you’d install Cygwin on it too). And I scheduled it all with cron instead of the Windows scheduler. And finally, close to realtime sync with multiple clients. Unison also has a wonderful server side file change log (but only over ssh, not FTP).

In conclusion, don’t do this. I’m sure you wouldn’t want to anyway. It’s too hard to set up, and since it’s only been running for a few days, I don’t know what the cons are. About halfway through setting this up I started to think of an easier way. Much of the freeware sync software supports syncing with networked computers rather than with FTP. I’m thinking a virtual LAN (like Hamachi or Remobo) along with a network mounted drive to sync to will be much, much easier. Good luck from here on out. If you ever find an easy way to do it, write up another article.

Samuel,

Hope you are still monitoring this.

You mention DropBox a number of times but don’t explain why it is not your preferred solution.

I have DropBox working on a small data set (1/4 gig) with three computers, but I’m loath to put the motherlode (4 gig/100,000 files and 20,000 directories) into production.

I, too, have been searching for the same thing. I note that there are two new competitors to DropBox: Wuala and SpiderOak. SpiderOak has the full package (end to end encryption with absolutely NO access to your data without the password – which they do not store, of course).

But SpiderOak’s comment history is quite poor. I’m always distrustful of those who hide behind downloading the software and actual registration (even if it is free) just to READ what has been posted on their forum. Wuala seems to have a problem with XP Pro machines that it can’t solve, and I still have a few of them.

Anyway, I’m curious about why you don’t just recommend DropBox?

Mike

I am indeed still monitoring this. Dropbox is a fantastic service. I had the opportunity of touring their spacious offices in San Francisco in late 2010 and meeting a few of their incredibly energetic staff. Wonderful company. Here’s the thing: for now, Dropbox only offers a free 2GB-8GB, paid 50GB or 100GB solution. For my purposes outlined in this article, I am dealing with *hundreds* of gigabytes of data (now approaching 600GB and counting) with no end in sight for the number of users I need to continue to add to the mix. So, even if I wanted to go with Dropbox, I wouldn’t be able to because their highest tier is 100GB for $20/mo. But, let’s pretend for a moment that I had less than 100GB of data. It still isn’t feasible for me to go with Dropbox because for *each* new user that needs access to the data, I would either need to: (a) convince them of the need to pay the $20/month to Dropbox; or (b) shoulder the cost on my own. At present, with over 20 users connected to my FTP server, that would be at least $400/month being paid out to Dropbox *every month*! $4,800 per year! Realistically, I am sure that Dropbox plans to include larger “Dropboxes” in the future for clients who need the space (like me), but in that case it would cost even more than the $400/month I am already worried about. Therefore, my quest for the Perfect Sync got under way. I hope this answers your question, and thank you for reading!

-Samuel, VGHz Admin
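The arithmetic in that reply can be checked with a quick sketch. The function name `dropbox_cost` is hypothetical; the $20/month fee and the 20 users are the figures quoted above, and “over 20 users” means the result is a lower bound.

```python
def dropbox_cost(users, monthly_fee_per_user=20):
    """Return (monthly, yearly) cost in dollars when every user needs a paid plan."""
    monthly = users * monthly_fee_per_user
    return monthly, monthly * 12


if __name__ == "__main__":
    monthly, yearly = dropbox_cost(20)
    print(f"${monthly}/month, ${yearly}/year")
```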

Thanks, I very much enjoy reading what you write. A breath of fresh air in the honesty department, along with it being well written.

I am well under the 100 gig limit, so my search is different from yours. Actually, I would be happy for a very long time at the 50 gig limit. In addition, I own all of the computers that would be syncing, so I only need 1 account. WebDrive has a cost per seat, so it is more expensive.

My reluctance with DropBox is limited solely to the fact that they have less than perfect security. My account password is known to them, and with that, they can access any file, even though it is actually encrypted on their servers. Their forum has many messages which highlight the security hole and while I’m not worried about the government finding anything of interest, I am worried about my clients saying I have played fast and loose with their sensitive information (even file names may be too much information).

Do you have any experience with SpiderOak? The reviews are universally horrible, but things change very fast in this area and most of the negative comments are more than a year old. No new negative comments may just be a result of them not having much new business (due to the negative comments) or it may be a result of them getting their act together.

I have a few questions about WebDrive, though, as I haven’t completely ruled it out:

1) They have a GroupDrive product which appears to be little more than what you have described above. Yes, it has a few bells and whistles not included in WebDrive, but hardly worth the extra expense for a small enterprise like mine.

2) Once the drive letter is assigned, does the WebDav implementation allow the “subst” command? For example, assume a drive S: has been created and I have two directories s:\dir1 and s:\dir2. If I run a “subst x: s:\dir1” command will it allow access to files in the s:\dir1 directory via x:\? I assume it will.

3) I am mostly concerned with speed. I actually WANT the entirety of the synced information to reside in the cache so that local access is immediate. With the cost of local storage these days being so low I don’t see this as a problem. Do you see any issues with a cache of 3 or 4 gig on a drive with 250 unused gig?

I see you are a night owl, like me.

Thanks for the response.

Mike

Mike,

I very much appreciate your comments. Allow me to address some issues you have raised now that I better understand your situation. First off, I completely understand your need for security and privacy for your clients. In fact, the data I am now hosting on my private FTP server is confidential and sensitive information in a federal criminal matter. So, that was another (lesser) reason for my switch to having the data completely under our control, rather than having a third party such as Dropbox involved.

I do not have any experience with SpiderOak. I think I’ll check that out.

1) Never heard of GroupDrive either. I’ll check that out.

2) I just went ahead and confirmed the subst command does work with WebDrive. I’ve never heard of that command. I map network drives all the time, but that’s a nice command line utility there — thanks for the tip!

3) I do see a problem with caching that much data. Since I wrote this article, WebDrive was rolled out on my clients’ machines, and I noticed that it had a few bugs here and there with caching. Sometimes I need to “flush cache” in order for it to register new folders that are created server side. This worries me because I cannot expect my clients to be savvy enough to think “oh hey, maybe I should right click and look for an obscure ‘flush cache’ feature to make things work.” Nope, instead, they’ll just call me saying “the server deleted my data.” So, I imagine this being even worse with several gigs under WebDrive’s auspices.

Having said all that, have you considered trying to use the Windows Vista/7 Sync Center/”Manage Offline Files”? In theory it should be perfect for our solutions, however, as I noted in my latest article, it’s something of a mess in Windows 7 by my testing and observations. Keep me updated with your progress if you’d be so inclined. =)

Thank you for taking the time to respond. It is appreciated.

There is only one item that I’d really appreciate a response to. As far as the “flush cache” command goes, I would have no trouble training those who work on my computers to right click and select the command when that is appropriate. But my concern is speed. Does the command erase the entirety of the cache and then download the entire thing again (ouch), or is it more like an on-command forced sync which checks for new or deleted files and makes sure everything is up to date? The former would take hours before the files were again usable, while the latter might take mere seconds (although I would certainly settle for 3-5 minutes if it wasn’t terribly frequent). This is a huge issue for me, and, in fact, the only thing in this long response that I’m actually asking for a response to.

But now that the request portion of the response is noted…….

I can’t find much positive on SpiderOak on the web. Shame, too, because they have, hands down, the best feature set. To top it off, after signing up for the free service, I was sent one of those “now that you are familiar with our service” emails after about 3 days, offering me a whopping 10% discount if I signed up for the pay version ON THAT DAY ONLY. Tacky, tacky, tacky. There is even a comparison chart posted on DropBox that shows, of the 4 services compared, that SpiderOak has the richest feature set and that some of the SpiderOak features that aren’t native to DropBox have DropBox workarounds available. It can be found, currently, at: https://www.dropbox.com/s/hqfrredz2253sq0/Cloud%20Comparison.pdf

If you end up having the time to check out GroupDrive and feel like posting a response here, that would be appreciated.

Well, with the subst command working, I am very close to sampling WebDrive. When the first PC operating system you used was good old DOS, you get spoiled doing certain things the old fashioned way. :-) The subst command is the local version of map network drive. I have many (thousands of) Word documents and Excel files that rely on feeder files, all of which are hard coded with a subst drive (x:), because Word and Excel just don’t let you specify a feeder file without specifying the drive letter. Layering sync on top of that is, shall we say, challenging. If the underlying files are on another computer, I map it to x:. If they are on the computer doing the work, I subst the drive x to the proper directory. As I’m sure you can appreciate, it is also great at testing on a single computer a system which will eventually end up involving network drives. So, you can, while working on that BIG PROJECT, do so on your laptop at the pool and seriously be working on the project… well, sort of….

As far as Windows Vista/7 goes, I know this will make me sound like a Luddite, but all of my machines are XP (except my wife’s new laptop) and I’m likely to retire before they become obsolete for doing the work I do. So, I’m trying desperately not to learn as much about 7 as I know about XP (my wife is determined to scuttle that, though). But to more directly respond to your question, even if I had a battery of Windows 7 machines, it is hard for me to imagine that Microsoft would design anything serviceable for a small enterprise like mine. I’ve been down that path with many different technologies and the ones that emanate from Microsoft are invariably bloatware, resource hogs and temperamental. Other than that, I’m sure it would work just swell.

I will, of course, update you when and if a milestone of any kind is passed.

Thanks for the dialogue.

Samuel, you can forget the GroupDrive product. It is described as a “suite” of products, the first of which is a Windows Server replacement for the FTP server you describe. Unless you are running your FTP server on a Windows Server machine, it would require you go out and get another machine. If I ever go the Windows Server route, it looks like it might be worth exploring.

One more question: You seem to have FileZilla configured for FTP. Any reason not to use it in SFTP mode?

Thanks, again.

Just thought I’d check back in and see if you have any thoughts to my comments #14 and #15, above.

Hope all is well with you.

Mike —

Unfortunately, the flush cache does indeed clear *everything* and does not simply initiate a force sync. Ouch is the right word for this situation.

I am using FileZilla in FTPS mode, which is different than SFTP. SFTP is basically SSH, which requires a convoluted series of steps to have compatibility working (with something like CopSSH, Cygwin, multiple Windows accounts for each login to correspond w/ the SSH), so I say forget that. I went with FTPS (implicit) which is incredibly simple to set up. I configured it to only encrypt the login credentials to prevent password sniffing in the event that one of my clients uses a public wifi. I didn’t bother with encrypting the data stream since the data itself isn’t *that* big of a deal and would likely bore someone to death who is not familiar with our cases. So, FTPS is the way to go with FileZilla, not SFTP. IMHO.

Have you looked into LogMeIn’s Hamachi2? I might look into that once my FTP server starts having problems or gettin’ slow, or any other excuse!

:)

Thanks for the response.

I have found that no matter the distributed structure, whether it is VPN or WebDav, the fact is that opening (downloading) and saving (uploading) files is slower than molasses running uphill in the wintertime. I have tried OpenVPN, Hamachi (not Hamachi2) and others of that ilk (Leaf, Remobo, Wippien, etc.) a few years ago and just found the speed and reliability to be unacceptable.

The only thing that makes sense for me is the kind of distributed file structure that the cache you were describing allows and the kind of updating that Dropbox provides.

I *think* I have found the solution I’m looking for. There are now at least two add-ons to DropBox that provide true encryption (and therefore completely defeat DropBox’s de-duplication “feature”) on the user’s machine on a file by file basis. TrueCrypt does that wonderfully on an aggregated data set, but falls short on a file by file basis.

The two solutions I’ve seen are SecretSync and BoxCryptor. SecretSync just feels like it is more my style than BoxCryptor, so I’m going to try to do a test this weekend to see if it really works. As you might expect, it demands that each computer have two copies of each file, an unencrypted version in the SecretSync directory and an encrypted file in the DropBox folder. SecretSync monitors both the DropBox folder for changes, in which case it decrypts the file into the SecretSync folder, and the SecretSync folder for changes, in which case it encrypts the file to the DropBox folder (and after that, DropBox takes over and copies it to the other DropBoxes).

Seems like it is exactly what I need to plug all the security holes in DropBox.

It completely disables de-duplication, which doesn’t bother me, but might, at some point bother DropBox if *everybody* ends up using it. It also effectively eliminates the ability to pull a file off the cloud while away from home/office which is a feature I don’t have a need for, anyway.

There is no reason why the SecretSync folder can’t be located within a TrueCrypt volume, with the only warning being that one would have to ensure that SecretSync is told to stand down unless or until the TrueCrypt volume is mounted (otherwise, in the blink of an eye, I can see SecretSync thinking all of your files have been deleted – truly a “bad thing” as it then deletes the files from your DropBox folder and then, by extension, DropBox deletes all of your files from every other DropBox).

Wish me luck and thanks for the feedback.

Hi

The last post is quite a while ago, but I have to ask one question here!

I’m trying WebDrive right now and using offline caching! It works perfectly except for one thing!

When I delete a file locally in offline mode, the file comes back, downloaded again from the server! It is not deleted on the server like I want it to be!

The same with folders:

1) I’m in offline mode (working within the cache)

2) I delete a file/folder from the webdrive(cache)

3) I sync or go to online mode

4) the file/folder gets downloaded from the server again instead of being deleted from the server

Any ideas?

Using Windows 7 (64bit) & WebDrive 10.2 (64bit)

Thank you in advance!